Deploying Solar with BentoML

2024/04/18 | This is a joint blog post written by:

- YoungHoon Jeon, Technical Writer at Upstage

- Sherlock Xu, Content Strategy at BentoMLSolar is an advanced large language model (LLM) developed by Upstage, a fast-growing AI startup specializing in providing full-stack LLM solutions for enterprise customers in the US, Korea and Asia. We use its advanced architecture and training techniques to develop the Solar foundation model, optimized for developing custom, purpose-trained LLMs for enterprises in public, private cloud, on-premise, and on-device environments.

In particular, one of our open-sourced models, Solar 10.7B, has gathered significant attention from the developer community since its release in December 2023. Despite its compact size, the model is remarkably powerful, even when compared with larger-size models beyond 30B parameters. This makes Solar an attractive option for users who want to optimize for speed and cost efficiency without sacrificing performance.

In this blog, we will talk about how to deploy an LLM server powered by Solar and BentoML.

Before you begin

We suggest you set up a virtual environment for your project to keep your dependencies organized:

Clone the project repo and install all the dependencies.

Run the BentoML Service

The project you cloned contains a BentoML Service file service.py, which defines the serving logic of the Solar model. Let’s explore this file step by step.

It starts by importing necessary modules:

These imports are for asynchronous operations, type checking, the integration of BentoML, and a utility for supporting OpenAI-compatible endpoints. You will know more about them in the following sections.

Next, it specifies the model to use and gives it some guidelines to follow.

Then, it defines a class-based BentoML Service (bentovllm-solar-instruct-service in this example) by using the @bentoml.service decorator. We specify that it should time out after 300 seconds and use one GPU of type "nvidia-l4" on BentoCloud.

The @openai_endpoints decorator from bentovllm_openai.utils (available here) provides OpenAI-compatible endpoints (chat/completions and completions), allowing you to interact with it as if it were an OpenAI service itself.

Within the class, there is an LLM engine using vLLM as the backend option, which is a fast and easy-to-use open-source library for LLM inference and serving. The engine specifies the model and how many tokens it should generate.

Finally, we have an API method using @bentoml.api. It serves as the primary interface for processing input prompts and streaming back generated text.

To run this project with bentoml serve, you need a NVIDIA GPU with at least 16G VRAM.

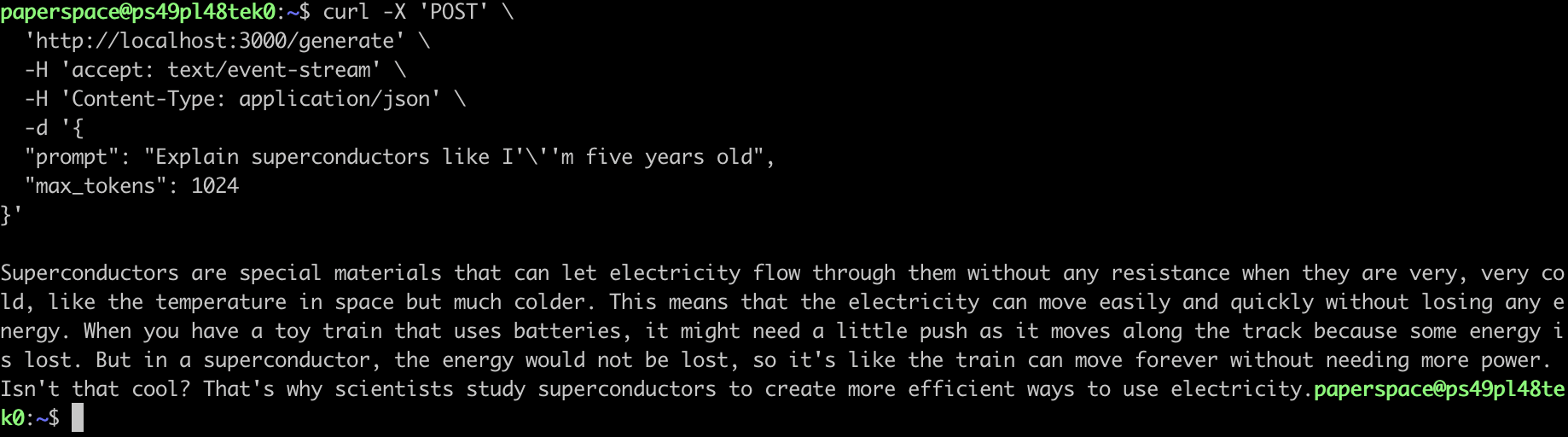

The server will be active at http://localhost:3000. You can communicate with it by using the curl command:

Alternatively, you can use OpenAI-compatible endpoints:

Deploying Solar to BentoCloud

Deploying LLMs in production often requires significant computational resources, particularly GPUs, which may not be available on local machines. Therefore, you can use BentoCloud, an AI Inference Platform for enterprise AI teams. It provides blazing fast auto-scaling and cold-start with fully-managed infrastructure for reliability and scalability.

Before you can deploy Solar to BentoCloud, you'll need to sign up and log in to BentoCloud.

With your BentoCloud account ready, navigate to the project's directory, then run:

Once the deployment is complete, you can interact with it on the BentoCloud console:

Observability metrics:

BentoML seamlessly integrates with a wide array of ML frameworks, simplifying the process of configuring environments across diverse in-house ML platforms. With its notable compatibility with leading frameworks such as Scikit-Learn, PyTorch, Tensorflow, Keras, FastAI, XGBoost, LightGBM, and CoreML, serving models becomes a breeze. Moreover, its multi-model functionality enables the amalgamation of results from models generated in different frameworks, catering to various business contexts or the backgrounds of model developers.

More on Solar and BentoML

To learn more about Solar by Upstage and BentoML, check out the following resources:

Research: Solar Depth Up-Scaling

Blog: Using RAG with Solar

BentoCloud is an AI Inference Platform for enterprise AI teams. Sign up now and get free credits!